AI Video Generation - The Future is already here

I concluded my last AI blog post in October with “open source video generation models… will overtake closed sourced ones sooner than expected!”. Surprise: it has already happened!

In this lengthy blog post, I want to:

Explain the enormous work that we did over the last 10 days, resulting in a funny AI movie (jump to the end if you are only here for that)

Explain the big picture of generative AI for videos


Since my last blog post, a ton of generative AI video models have been released, and I have tested the most important ones. To be upfront with my conclusion: Tencent’s Hunyuan Video is absolutely astonishing and will have a wide impact. The last time I had this feeling was when Stable Diffusion came out in 2022, which was the foundation for successful commercial products such as Midjourney and is still widely used (e.g. the new Mountain Dew commercials are made with it). I am convinced: Hunyuan will be for video generation what Stable Diffusion has been for image generation.

1. The Ideal Video Model

Obviously, we want video models to be expressive and generate videos of high resolution and framerate. Some not-so-obvious requirements include:

Open source: model can be downloaded and run locally

Uncensored: censored models lead to many problems

Allow the development of an ecosystem of tools around it

Following the analogy to Stable Diffusion I drew earlier: all of these were true for it and were key to why it won.

Model Censorship

In general, this is a difficult question. On the one hand, I concur that some extreme content should simply not exist at all, so models should not be able to generate it. On the other hand, over-censoring of models has some unintended side effects.

Earlier this year, Google’s image generation model Gemini was widely ridiculed when a prompt for “Nazi soldiers” generated Black and Asian soldiers. When OpenAI released DALLE in 2022, I tested it with the prompt “My Bavarian mother unintentionally takes a selfie with her mobile phone”. It also returned a selection of ethnicities that was unlikely to match what I actually wanted. What a bad user experience!

This year, Stability AI released Stable Diffusion 3, which the community had been eagerly awaiting. It quickly turned out that this model is quite useless. The training data contained only very few images of lightly dressed humans, leaving the model with almost no grasp of human anatomy. The prompt “woman lying on grass” quickly became a meme.

![Figure 1](/ai/good-bad-ugly/1_hu9c3d30bf53313f395dc9309d4b7f68f9_2061541_4736902eab9f0ecd57bf02bf8b4ee839.webp)

Ecosystem

In my last blog post, I showed how I built a pipeline out of several open source models. This ability is essential for many use cases, including professional media production and research. It also supports the creation of an ecosystem of supporting tools (more on this below).

The models developed by big Silicon Valley companies like Meta, Google, and OpenAI don’t support such use cases and probably don’t even want to; they are happy if users can modify a video they took in a café to show a Kombucha instead of a Coke or an Avocado Toast instead of a Hamburger.

An ecosystem of tools around foundation models is critical because it allows, among other things, the very precise specification of outputs. Simply speaking, text is one of the least useful ways to specify the image or video that I want to be generated. Much more useful are:

Controlnets: can specify output by visual constraints (colors, body poses, depth information, edge information etc.)

IPAdapters: can be used to transfer non-tangible information, such as the style of an image

LORAs: a more heavyweight approach than IPAdapters that allows much more control of styles, materials, and humans in the output

![Figure 2. Left: an image generated using a LORA I had trained on my appearance and a controlnet for body pose. Right: my coworker [Huyen](https://ar-ai.org/author/huyen-nguyen/), generated using an IPAdapter conditioned with 4 photos of her.](/ai/good-bad-ugly/2_hufba5692dd20cec5e2bc1458418e9c891_1409682_c5752d928a33c7648640a778276f4fef.webp)

2. Current Video Models

Of the models released since my last AI blog post, I consider the most important to be LTX-Video (1 month ago), Hunyuan (3 weeks ago), and the many additions to CogVideoX. Their main strengths are:

LTX: Lightning fast

CogVideoX: Extreme controllability

Hunyuan: Best visual quality

First, let me show an interactive session of me with LTX-Video, captured in real time from my screen. The speed is absolutely mind-blowing!

The CogVideoX family stands out by how many things can be controlled about the videos that are generated, including:

Using images to specify the start & end of the video

Defining trajectories of objects in the video

Exact specification of camera movements

Using LORAs, we can already specify styles like “Disney Movie”, with more coming out on a daily basis

Using a variety of controlnets

Video generation with CogVideoX using controlnet (courtesy of Kijai):

Finally: Hunyuan. It convinced me very quickly. Here is the sixth test video that I ever created with it. The quality is just unbelievable for a quick test video. As input, I used Huyen’s image from Figure 2, piped through an LLM for captioning and motion instructions.

3. Case Study: AI Remake of “The Good, The Bad, and The Ugly”

The goal of this experiment was to understand Hunyuan’s capabilities more deeply. It took 10 intense days from start to finish. It’s important to note that if I had simply wanted to generate the best possible AI video when I started, I would have used CogVideoX because of its strong controllability. And indeed, controllability was the main challenge in this experiment, as Hunyuan only accepted 2 types of inputs when we started:

Text prompts

Videos for specifying motion vectors

Together with my most artistic PhD student (Dávid Maruscsák), we set out to make a short AI movie with Hunyuan. Our plan of attack was:

Model exploration: understand censorship & prompting capabilities

Creative exploration: which kind of movie can we make given the technical constraints?

Production

Model Exploration

A very weird characteristic of Hunyuan is that to get the best outputs, you can’t really use natural language prompts (the same issue exists for e.g. Flux or LTX-Video). Instead, you must use an LLM that transforms what you want into a prompt that suits the model. Tencent very kindly also released the LLM that they use for that, but its VRAM requirement is 700GB… To put this in perspective, you would need 44 of the best consumer graphics cards available right now. That was too heavy for us. Instead, we used a quantized version of Llama 3.3 that only needs 35GB of VRAM, so it fits on our H100 card.
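The arithmetic behind these numbers can be sketched in a few lines. The post does not say which card the "44" refers to; the figure matches cards with 16 GB of VRAM each, so that value is an assumption here, as is the 80 GB capacity of the H100. The helper names are made up for illustration, and the calculation ignores any overhead from splitting a model across cards.

```python
import math


def cards_needed(model_vram_gb: float, card_vram_gb: float) -> int:
    """Smallest number of cards whose combined VRAM covers the model."""
    if card_vram_gb <= 0:
        raise ValueError("card_vram_gb must be positive")
    return math.ceil(model_vram_gb / card_vram_gb)


def fits_on_card(model_vram_gb: float, card_vram_gb: float) -> bool:
    """True if the model fits into the VRAM of a single card."""
    return model_vram_gb <= card_vram_gb


# Assumed capacities (not stated in the post): 16 GB consumer card, 80 GB H100.
print(cards_needed(700, 16))  # cards for the 700 GB prompt-rewriting LLM
print(fits_on_card(35, 80))   # quantized Llama 3.3 on a single H100
```

With 24 GB cards instead, the same function gives 30 cards, so the exact count depends on which consumer card you take as the reference.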

Without LORAs, the standard trick to achieve consistency between shots is to use celebrities as actors in your AI movie. So, we started by testing 120 celebrities.

The pipeline was fully automatic using Llama and Hunyuan. First, Llama generated a list of the top-20 celebrities for certain domains (sports, music, politics, fictional characters, etc.); then, it created a prompt in the expected format for Hunyuan. We generated four 5-second videos per celebrity, resulting in 480 clips that Dávid Maruscsák watched and rated. The whole process took 8 hours on a single H100 GPU.
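The structure of such a pipeline can be sketched roughly as follows. This is not the original code: `list_celebrities` and `write_prompt` are hypothetical callables standing in for the two Llama steps, the rendering step with Hunyuan is left out entirely, and the count of 6 domains is inferred from 120 celebrities at 20 per domain.

```python
from typing import Callable, List, Tuple

Job = Tuple[str, str, int]  # (celebrity, prompt, take number)


def plan_jobs(
    domains: List[str],
    list_celebrities: Callable[[str], List[str]],  # hypothetical LLM call
    write_prompt: Callable[[str], str],            # hypothetical LLM call
    clips_per_celebrity: int = 4,
) -> List[Job]:
    """Build one rendering job per clip: domains -> celebrities -> takes."""
    jobs: List[Job] = []
    for domain in domains:
        for celebrity in list_celebrities(domain):
            prompt = write_prompt(celebrity)
            for take in range(clips_per_celebrity):
                jobs.append((celebrity, prompt, take))
    return jobs


def minutes_per_clip(total_hours: float, n_clips: int) -> float:
    """Average wall-clock minutes spent per clip."""
    return total_hours * 60 / n_clips


# Stand-ins for the LLM calls so the sketch runs without any model.
domains = ["sports", "music", "politics", "fictional characters",
           "domain 5", "domain 6"]
fake_list = lambda domain: [f"{domain} celebrity {i}" for i in range(1, 21)]
fake_prompt = lambda name: f"prompt for {name}"

jobs = plan_jobs(domains, fake_list, fake_prompt)
print(len(jobs))                    # number of clips to render
print(minutes_per_clip(8, len(jobs)))  # average minutes per clip
```

Under these assumptions the plan contains 480 jobs, and 8 hours of GPU time works out to an average of one minute per clip, including the LLM steps.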

Armed with the results, we proceeded to explore different storylines for our movie.

Creative Exploration

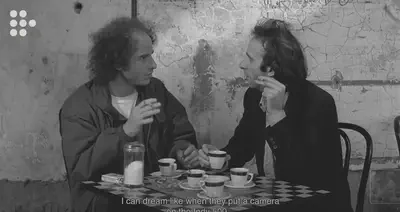

Our first idea was to remake the arthouse movie Coffee and Cigarettes. Initial results were very encouraging:

However, we quickly realized that with the currently incomplete ecosystem around Hunyuan, we can only depict 1 celebrity per shot reliably. In general, the success rate with 2 celebrities in a shot drops dramatically (from 90% to 1%).

So, we had to find a movie genre that mainly shows single actors in shots, which led us to “The Good, The Bad, and The Ugly”.

Based on our tests, the celebrity that works by far the best is Donald Trump, so he had to be one of them. I remembered that Donald Trump had some beef with Taylor Swift (“cat lady”), so we considered it a good fit to make Taylor “The Good”, as she kills “The Bad” at the end of the movie. That left us with determining “The Ugly”. While Vladimir Putin works very well in Hunyuan and could be a good fit, we were not too keen to experience a Novichok agent. So, we settled for Elon Musk (If you read this, Elon: Please don’t disable the brakes while I am driving my car. It is only a joke! Thank you!).

Production

To be honest, it was a painful process. We had about 20 short shots that we had to generate. Only very few of them worked through a completely automated pipeline. For the others, it was more like:

1. Generate 16 clips with automated pipeline (~5 minutes)

2. Look at clips, pick best one, refine prompt

3. Go to 1.
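Written as code, the loop above has roughly the following shape. In the process described here, picking the best clip and refining the prompt were manual steps, so `generate_batch`, `pick_best`, `is_good_enough`, and `refine_prompt` are hypothetical placeholders, and `max_rounds` is an added safeguard that is not part of the original workflow.

```python
from typing import Any, Callable, List, Optional, Tuple


def refine_until_good(
    prompt: str,
    generate_batch: Callable[[str, int], List[Any]],
    pick_best: Callable[[List[Any]], Any],
    is_good_enough: Callable[[Any], bool],
    refine_prompt: Callable[[str, Any], str],
    batch_size: int = 16,
    max_rounds: int = 10,
) -> Tuple[Optional[Any], str, int]:
    """Repeat generate -> review -> refine until a clip is accepted.

    Returns (accepted clip or None, last prompt, rounds used).
    """
    for round_no in range(1, max_rounds + 1):
        clips = generate_batch(prompt, batch_size)  # step 1
        best = pick_best(clips)                     # step 2: review
        if is_good_enough(best):
            return best, prompt, round_no
        prompt = refine_prompt(prompt, best)        # step 2: refine, then repeat
    return None, prompt, max_rounds


# Toy stand-ins: a "clip" is (prompt, index), and a prompt counts as good
# once it has been refined twice (each refinement appends "!").
toy_generate = lambda prompt, n: [(prompt, i) for i in range(n)]
toy_pick = lambda clips: clips[-1]
toy_good = lambda clip: clip[0].count("!") >= 2
toy_refine = lambda prompt, best: prompt + "!"

clip, final_prompt, rounds = refine_until_good(
    "a cowboy", toy_generate, toy_pick, toy_good, toy_refine
)
print(clip, final_prompt, rounds)
```

The cap on rounds matters in practice because some shots never converge, as described below.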

But, oh boy! When it worked, it worked astonishingly well:

A few clips were completely impossible to generate, as there is a strange dependency between:

Celebrity in the shot

Activity that the celebrity is doing

Overall scene

I still don’t fully understand this point. Changing a single word in the prompt can get you from “completely unusable” to “perfect”.

It took me about 10 hours of work to generate the 20 shots. Finally, Dávid used his great video editing skills to put everything together. Enjoy!

Conclusions

Hunyuan Video demonstrated great potential in this project. I also had to dive into LLMs (something that I had religiously avoided until then), which was a very interesting experience! I can’t wait for Tencent to release further capabilities for their model (most importantly: image2video), and for the open source community to create more and especially better LORAs (I found that the ones currently available do not work very well).

Acknowledgements

Dávid Maruscsák: Creative input, video editing

Banodoco Discord: Technical support and joint explorations of the model

Kijai: advanced technical support