Short Animation with Wan Video, Flux Kontext, and DeepSeek

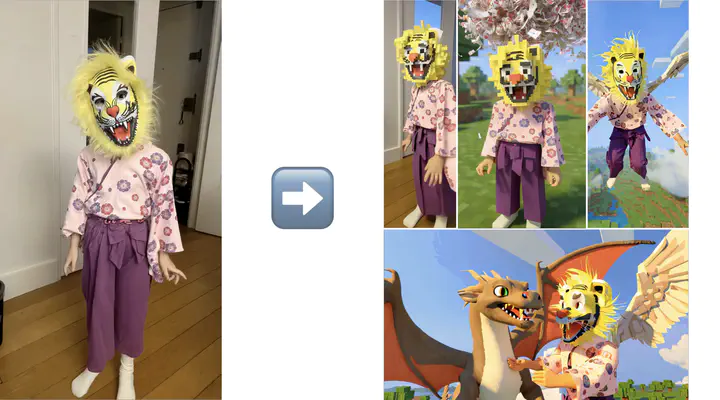

My daughter Kate (7 years old) really loves Minecraft! Together, we used several generative AI tools to create a 1-minute animation based on only 1 input photo of her. The whole project took around 20 hours of work and I learned several lessons that I want to share here.

Context

I am still trying to get used to the enormous speed with which generative AI is progressing. 6 months ago, I was blogging about my experiments with Tencent’s Hunyuan Video, which was an absolute breakthrough at that time. A lot has changed since then! The open-weights generative AI community has fully embraced Alibaba’s Wan Video as a superior replacement for Hunyuan. What makes WAN so powerful is that several extensions have been shared openly:

- Alibaba themselves have released a zoo of base models with different capabilities: text to video, image to video, first-and-last-frame to video, Wan-Fun-Control accepts a variety of conditioning inputs

- LORAs: A wide range have been trained. Compatibility between LORAs and the various base models is complicated.

- VACE: powerful control of generated videos

- CausVid and its successor SelfForcing: incredible speed gains

- …and this is just the tip of the iceberg!

Experiment Summary

My goals for this experiment were:

- Have fun with Kate and involve her as much as possible in the conception of the story

- Play with the Wan Video ecosystem to produce a 1-minute animation

- There should only be 1 explicit real-world visual input: a photo of Kate wearing a tiger mask and a pink yukata.

In total, we spent about 20 hours on the project:

- 1 hour: storyboarding

- 4 hour: workflow building

- 15 hours rendering

- 1 hour: selecting best results

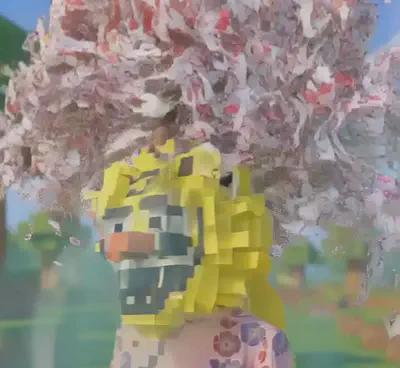

Out of the box, Wan can create clips of about 5 second length. For each of our 13 scenes, we rendered 4-8 variations (1-2 hours rendering time on an H100 GPU). We let it run over night on 2 H100s (15 GPU hours in total). Afterwards, we picked the best results among the variations, which was comparatively much quicker.

In the remainder of the article, I describe the remaining 2 phases: storyboarding & workflow building.

Storyboarding

From the top-left onwards, prompts were as simple as:

- Make the character look like a pixelated minecraft character.

- Put the character into a lush green minecraft landscape.

- The chacter turns to the side and puts their hand on the top of the head of a minecraft villager

As Black Forest Labs currently keeps the community waiting for the open weights of this model, the only way for me to use it was to pay for API access (one of the very few instances where I paid for closed-source AI rather than to do it locally). But, costs were very fair (3 EUR for making the storyboard and playing some more with it).

This phase of the project went much quicker than I would have expected, thanks to the amazing power of Flux Kontext.

Workflow Building

Next, I had to create a ComfyUI workflow that generates nice clips based on the storyboard. My first decision was to select the Wan foundation model. I opted for FLF2V-14B, which can generate clips based on the 3 inputs: the desired first and last frame, along with a text prompt describing the clip.

Next, I invested some time into evaluating speedup methods for Wan, which is notoriously slow. The by far best method at the moment is Self Forcing. Some helpful folks have extracted a simple LORA for it, which can be used in many different Wan workflows. In my tests, I could get clear and impressive speedups in the order of 5x. When iterating a low-qality preview video, it’s a huge win to wait 30 seconds (with Self Forcing) vs. 3 minutes (without).

Unfortunately, I had to find out that the Self Forcing LORA I was using is incompatible with the FLF2V-14B model. The moment I turned on Self Forcing, prompts were ignored completely. I even tried translating my prompts into Chinese, as some users have noted that it works much better with Chinese rather than English prompts; an experience I could not replicate. With hindsight, I should have now abandoned FLF2V-14B at this point and should have switched to a workflow with the I2V-14B model for image-to-video and VACE-14B for control, all acceleratable with Self Forcing. Oh well, lessons learned…

The final missing puzzle piece is: how did I get the prompt to describe what happens in the clip between first and last frame? Following a related technique that David Snow shared on Banadoco, my gameplan was:

- Florence: describe what’s in the 2 images

- LLM: Based on these 2 descriptions, generate a prompt that describes what happens in between

- Render a few quick preview videos and edit prompt by hand if needed (only about 50% of scenes needed prompt editing) This workflow worked like a charm!

The main time sink was that I fell into a little side-quest to catch up on LLMs. I tried the new contenders (DeepSeek R1-0528, Qwen 3, DeepSeek R1-0528-Qwen3-8B, etc.), based on 2 programming tests (flappy bird, balls inside a rotating heptagon). Flappy bird is too easy, and rotating heptagon is too hard (see below for how most models fail); I wonder what the sweet spot would be for testing?!

I almost liked Qwen 3, because of its fast reponse time, combined with 2 modes of operation (thinking vs. not-thinking). Unfortunately, the 32B model is somewhat underpowered, wheras the 235B model is too big to fit nicely on my H100. I wish they had a ~70B parameter model! In the end, I settled for my trusted DeepSeek-R1-Distill-Llama-70B-Q5_K_M.gguf, which has a good balance between speed and quality of replies. I still can’t express well how impressed I am with DeepSeek, raising a bad model (Llama3) to a great one!

Next, I had to slightly edit David Snow’s prompt to arrive at:

You are a creative video prompt generator specialized in crafting unique, vivid descriptions for ultra-short videos (approximately 5 seconds or 81 frames at 16fps). Your task is to generate one single highly detailed, imaginative prompt that can tell a complete visual story within this brief timeframe.You will be given a description of two images. The phrase “– end of image one –” describes the first frame of the animation. Next follows a description of the last frame of the animation, delimited by the phrase “– end of image two –”. This description is immediately followed by a prompt that describes how to create a dynamic animation.

Write a prompt to create a dynamic animation.

Avoid verbose, overly complex language and focus on simple, clear English. Prompt for interesting movements of characters and the camera. Be creative and prompt for unexpected things. 160 tokens maximum.

Be creative and unique with your transitions.

Results

The end result is not very refined, but very respectable for a first draft. Imagine that someone had shown you this video a few years ago; how amazed would you have been by the production speed and cost!

Below, I want to highlight a few scenes:

Lessons Learned

- Self Forcing is great and is strongly recommended to speed up generation time.

- Wan FLF2V-14B is a shaky model. Next time, I would rather use I2V-14B with VACE because of the incompatibility with Self Forcing

- I can’t wait for the Flux Kontext weights to be released!

- Adobe Research: Great job with Self Forcing! I can’t wait for the next iteration of your work.