Demo at ACM SIGGRAPH Real-Time Live!

A long-term dream of mine came true! At the end of July 2024, we showed a demonstration at ACM SIGGRAPH 2024 in Denver for their Real-Time Live! category.

First of all, what is ACM SIGGRAPH Real-Time Live!? It is a specific category at the premier computer graphics conference, ACM SIGGRAPH. While SIGGRAPH has been running every year since 1974, the Real-Time Live! category (RTL for short) has only been around for 15 years. It is very engaging, as presenters have 6 minutes to show a demonstration of real-time graphics to a large audience of computer graphics professionals (~5000 people). For me, this has always been the most fascinating event of SIGGRAPH, as it shows you the maximum of what’s possible in real-time graphics today. Also, compared to research papers, you can actually see the inventors demonstrating what they made, which makes it much clearer what really works and what doesn’t.

Here are some of my favorites from recent years:

- AI & Physics-Assisted Character Pose Authoring (2022)

- Bebylon (2018)

- IQ livecoding with Shadertoy (I can’t find the video on YouTube; guess it was before they started uploading RTL to YouTube)

How did our demo come about? In December 2023, I contacted Matt Swoboda about submitting something to RTL. We had been playing with this idea for the last decade, but it never materialized until this year! Matt quickly brought in a great artist, while I secured some sponsorship and support from Canon Japan, which led to this amazing constellation:

- Best real-time rendering engine for live events: Notch (Matt is their Co-Founder & CTO)

- Fantastic audio-visual artist: Brett Bolton (Designer of U2’s show in the Las Vegas sphere, VJ for the Grateful Dead, etc.)

- The most stressable AR demo crew: David Maruscsak and me 😊

- Best AR headset: Canon’s X1 (Sorry Apple, but you need to work much harder! Even though X1 was released 4 years before your Vision Pro, it has much better image quality, better co-axial alignment of screens and cameras, better tracking infrastructure, easier integration with custom software, much better weight, etc.; let me not even get started about Meta’s headsets…)

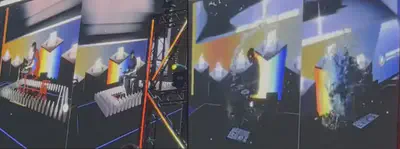

What is the concept of our demo? You can watch a concise summary of our concept in the submission video below that we sent to SIGGRAPH. The main feedback that we received from the organizers was that we should expand our demo to have 2 viewers instead of 1 in order to highlight the potential to have visuals interact with multiple viewers in 3D. Challenge accepted!

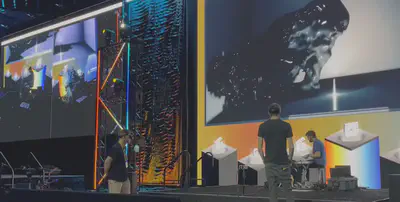

What could the audience see? Unfortunately, there were several issues with the YouTube stream of the event, so it was much harder for the remote audience to understand what was going on. Let me start by explaining what the audience in the room could see.

The setup shown in the above figure was not clear for viewers of the YouTube stream. It did not help that for the first half of our performance, the stream was only showing the view of 1 viewer.

The main challenge with our setup was controlling the lighting. Since the sensors are quite sensitive to external lighting (as well as the AR content), we did our best to control this (including working with the SIGGRAPH AV team), but even with our best efforts we had some glitches during the final performance. That said, we still got very nice compliments from the other participants and the audience.

Showtime! After months of hard work, we were finally ready to demo! The hall filling with the audience was an awe-inspiring moment for me (I estimate about 3500 attendees).

Luckily, we were the first team to present, so we could relax afterwards and enjoy the show, which contained some truly remarkable demos, including:

- The controller for Jim Henson’s muppets

- Movin Tracin’: my personal favorite. I was surprised that this demo did not get any award.

- Mesh Mortal Combat: winner of both awards (audience & jury).

You can also just watch the whole stream, or jump directly to our part.

Conclusions: It was a great experience to present at Real-Time Live! I hope to return in coming years!

What we learned from this work is that there is a huge potential for having visuals at live events in AR headsets; we got encouraging feedback at the conference!

We also learned that it is very challenging, both in terms of technology and in terms of having a viable business model. But we have ideas for both. Stay tuned!

Acknowledgements: First and foremost, I would like to thank Brett and Matt for participating in this project. They are exemplars of a rare breed: extremely skilled folks who put creativity before money.

Second, I would like to thank Canon for advanced support and a financial contribution to our costs.

Last, but not least, I would also like to thank the RTL organizers, who did a really great job of putting this event together! See you next year!